Internet companies publish different types of data and metrics in their transparency reports. The following list is not exhaustive, but it identifies the most commonly disclosed reporting categories across the industry:

- Legal Information Requests

- Legal Content Takedown Requests

- Policy Enforcement

- Intellectual Property Enforcement

Legal Information Requests

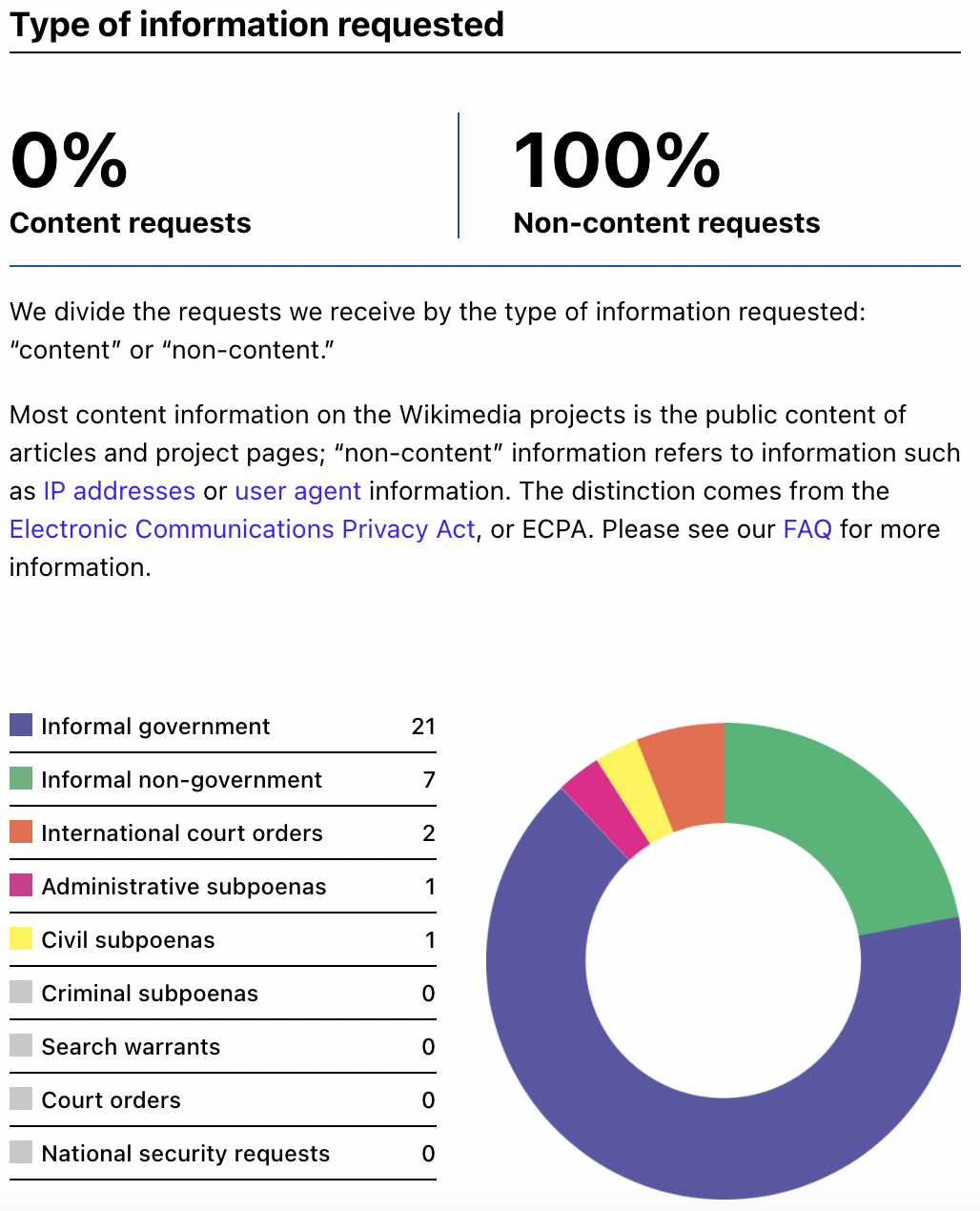

Transparency reports on legal requests for user information contain data and metrics on the requests that companies receive from governments, law enforcement agencies, and other third parties, usually in the course of a criminal, civil, or national security investigation. Legal information requests almost always ask for “non-content” information such as basic subscriber information (e.g., a user’s email address), but sometimes they also ask for “content” information (e.g., a user’s messages, posts, and photos). Before a company discloses this information, it reviews the request to evaluate whether it is legally valid. Requests for user content are often subject to more stringent requirements before companies can disclose it. Companies may also receive emergency disclosure requests: non-binding requests submitted by law enforcement in urgent situations where the information is necessary to mitigate an imminent risk of death or physical injury. In these cases, companies may voluntarily disclose the minimum amount of information necessary to avert the potential harm, but they usually require the law enforcement agency to provide proper documentation within a reasonable timeframe after the crisis has been resolved, to show that the request was legally valid.

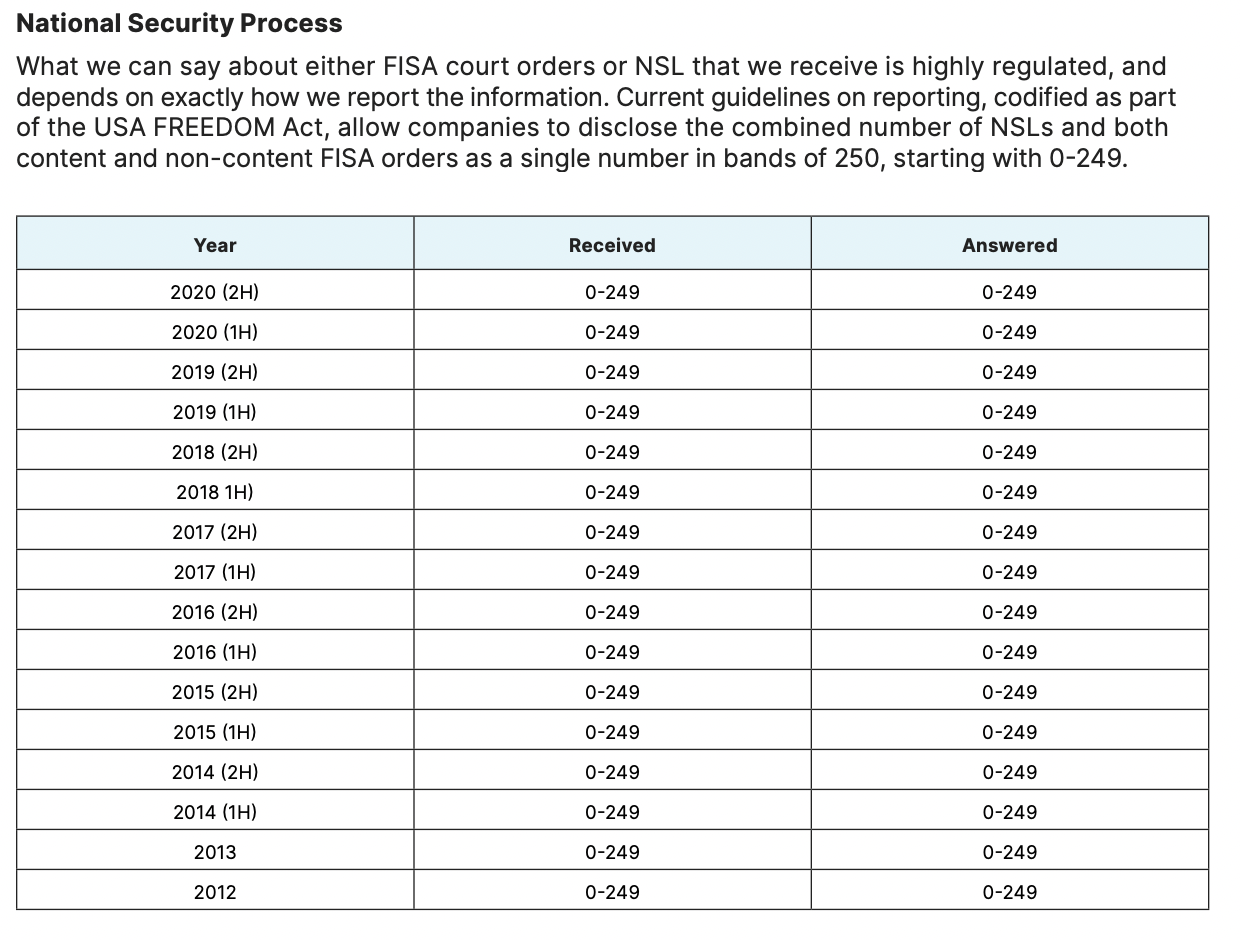

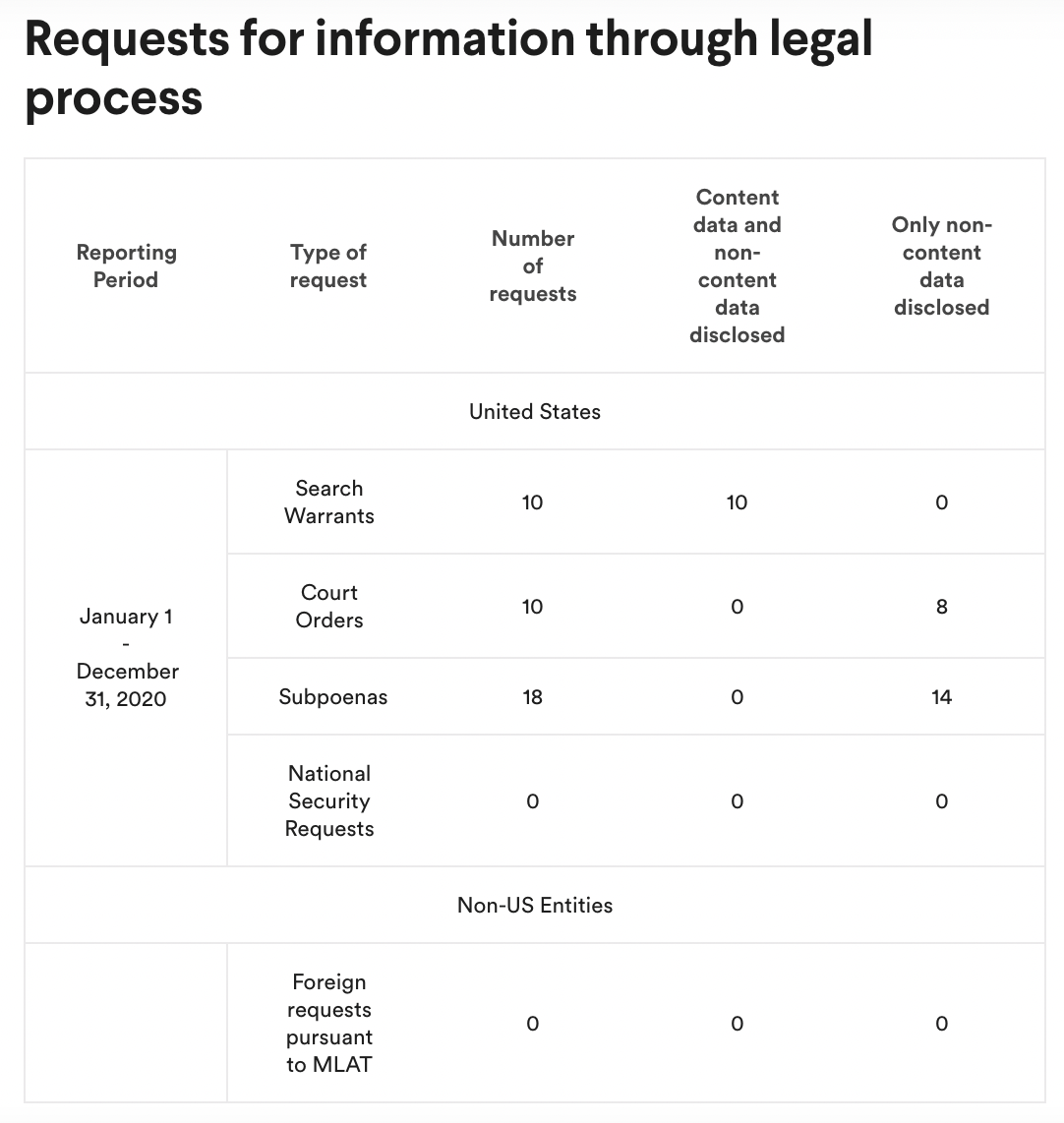

At their most fundamental level, transparency reports on legal requests for user information typically provide the following metrics for a given period: how many disclosure requests were received, how many users were the subject of those requests, and how many of those requests were complied with (i.e., some or all information was disclosed). Reports also usually segment the data by the type of legal process received (e.g., search warrants, subpoenas) and by the country where the request originated (e.g., the United States, India). The most basic form of reporting in this category covers government requests in criminal matters, which are often the highest in volume, but some reports also include metrics on civil, non-government requests.

Companies may also publish data on government preservation requests, which ask a company to temporarily preserve information from a specified account until valid legal process is issued. Although practices vary from company to company, a preservation is generally a snapshot of the account and its information at a certain point in time; that information is kept unchanged for a limited period, even if the user changes or deletes content in the account. For companies based in the United States, it is also common to report on national security requests, including metrics on both Foreign Intelligence Surveillance Act (FISA) court orders and National Security Letters (NSLs). Unlike other transparency reporting categories, national security requests are subject to specific U.S. legal restrictions on what, how, and when companies can report about them.
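To make these core metrics concrete, here is a minimal Python sketch of how request-level records might be rolled up into report rows segmented by country and legal process type. The `InfoRequest` record, its fields, and the `summarize` function are hypothetical; real companies track far more detail and apply their own counting rules.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class InfoRequest:
    """One legal request for user information (hypothetical record format)."""
    country: str            # where the request originated, e.g. "US"
    process_type: str       # e.g. "search warrant", "subpoena"
    account_ids: frozenset  # accounts named in the request
    disclosed: bool         # True if some or all information was produced


def summarize(requests):
    """Roll requests up into report rows keyed by (country, process type)."""
    rows = defaultdict(lambda: {"requests": 0, "accounts": set(), "complied": 0})
    for req in requests:
        row = rows[(req.country, req.process_type)]
        row["requests"] += 1
        row["accounts"] |= req.account_ids  # each account counted once per row
        row["complied"] += int(req.disclosed)

    report = {}
    for key in sorted(rows):
        row = rows[key]
        report[key] = {
            "requests": row["requests"],
            "accounts": len(row["accounts"]),
            # Percentage of requests where some or all information was disclosed
            "compliance_rate": round(100 * row["complied"] / row["requests"], 1),
        }
    return report


# Hypothetical sample data, for illustration only
sample = [
    InfoRequest("US", "search warrant", frozenset({"a1"}), True),
    InfoRequest("US", "search warrant", frozenset({"a1", "a2"}), True),
    InfoRequest("US", "subpoena", frozenset({"a3"}), False),
    InfoRequest("IN", "court order", frozenset({"b1"}), True),
]

for key, metrics in summarize(sample).items():
    print(key, metrics)
```

Counting distinct accounts with a set is one design choice. Summing the number of accounts named in each request is another, and it would count an account that appears in two requests twice. Reports that publish "users/accounts specified" may follow either convention.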

Examples of Transparency Reports and the Types of Metrics Reported

The transparency report on legal requests for user information is an important part of the public debate around government surveillance and digital privacy. Recent examples of transparency report data informing this debate include coverage of the increasing volume of U.S. national security demands and of corporate non-compliance with the Hong Kong National Security Law.

Examples of Requests for Information Transparency Reports

- Apple, Government Requests Transparency Report

- Facebook, Government Requests for User Data Transparency Report

- Wikimedia Foundation, Transparency Report 2020

More Information

- The Transparency Reporting Toolkit, Berkman Klein Center at Harvard University and Open Technology Institute

- Way of a Warrant, Google (YouTube video)

Legal Content Takedown Requests

Transparency reports on legal content takedown requests include data and metrics on the requests received by internet companies to alter or remove content that allegedly violates a local law. These requests can come from governments, law enforcement agencies, judges, lawyers, and even private citizens. Companies may respond to such requests by removing the content globally for a policy violation, or by “geo-blocking” content, which means that its availability will be restricted in the country where that content allegedly violates local law.

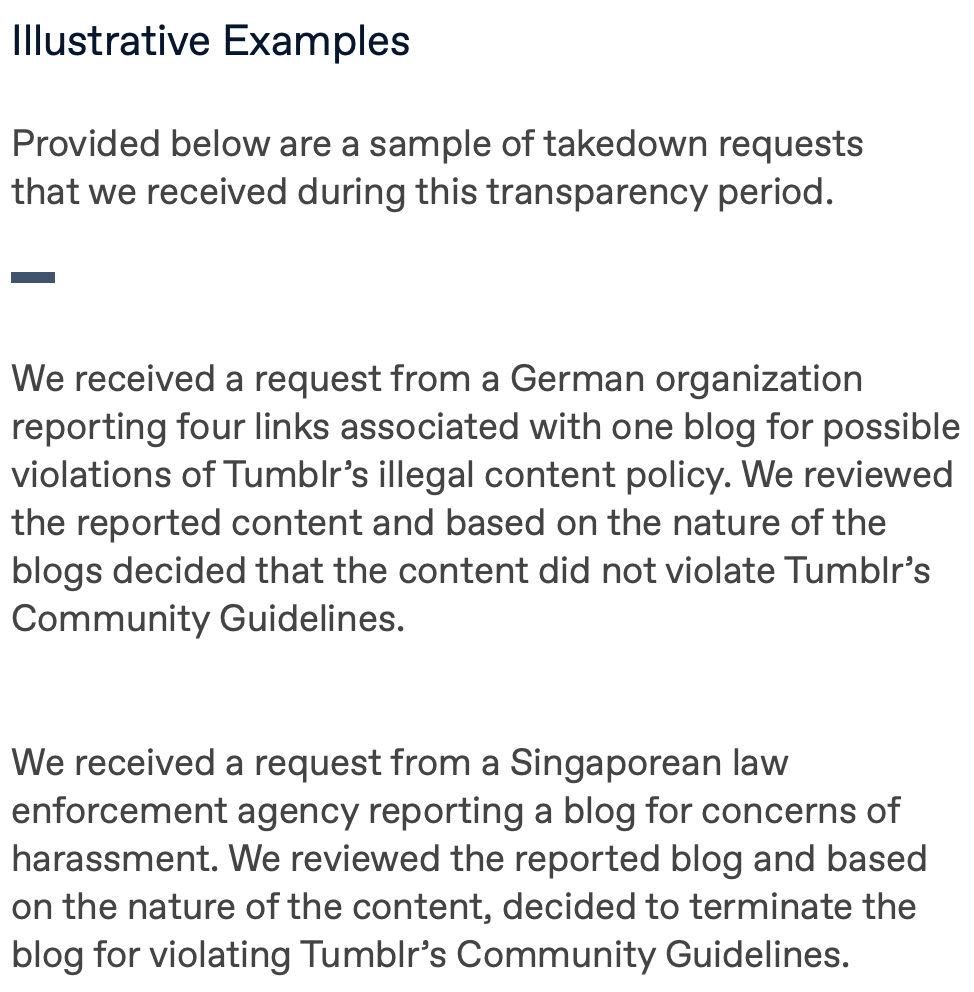

At a minimum, transparency reports for this category disclose the number of takedown requests received from each country and the number of accounts or pieces of content actioned as a result of those requests during a given time period. Although companies do not generally divulge the details of legal takedown requests to the public, some have started providing high-level information about requests that may be of public interest.

Examples of Transparency Reports Showing Government Takedown Requests

Sometimes local law aligns with a company’s own content policies. For example, child sexual abuse material (CSAM) is illegal in the vast majority of countries, and it also violates the policies of all mainstream internet companies. Thus, if a company receives a legal takedown request for CSAM, it may remove the content globally and categorize the action as a platform policy violation rather than as a legal takedown. Outcomes like these are not always reflected in transparency reports, although some companies choose to report them in a separate “content policy violation” category alongside the legal takedown requests category.

By contrast, it is not unusual for local laws to prohibit content or behavior that is otherwise acceptable according to a company’s policies; for example, Thai law prohibits criticism of the Thai monarchy, even though most companies permit their users to engage in such discussion. In these cases, companies may respond to such takedown requests by “geo-blocking” content. This means that the content will not be available in the country where it allegedly violates local law, but will remain available to users in all other locations.

Example of a Transparency Report that Shows Content that Violated Community Guidelines or Local Laws

Many legal takedown requests are relatively uncontroversial; for example, government takedown requests often concern the unauthorized sale of regulated goods or accounts impersonating government officials. However, internet companies also frequently receive takedown requests from governments and others that aim to censor legitimate online speech under the guise of legal violations. The legal content takedown section of a transparency report is therefore critical to the public’s ability to monitor government threats to digital free expression, as well as the extent to which companies comply with those threats.

Examples of Legal Content Takedown Requests Transparency Reports

- Google, Government Requests to Remove Content Transparency Report

- Microsoft, Content Removal Requests Report

Policy Enforcement

Transparency reports on policy enforcement include data and metrics about the moderation and enforcement actions a company takes under its own policies. Content policies (also referred to as Community Standards or Community Guidelines) explain the types of content or behavior that a company prohibits, and often also explain how the company responds when a user breaks those rules. You can read more about content policy in the Creating and Enforcing Policy chapter.

The transparency report on policy enforcement includes metrics such as:

- How many pieces of content, and associated accounts, were removed or otherwise enforced against for violating a company’s policies?

- How many pieces of content were reported by users (regardless of whether the content was removed)?

- How many users reported content that was later removed?

- How much violating content was removed before a user ever reported it?

- How long was violating content available on the product before it was removed?

- How many users have asked the company to reconsider a moderation decision?

- How often was the original moderation decision overturned after a second review?

The report may also segment the data by violation type (e.g., spam, violence), by how the violation was detected (e.g., automation, user reporting), and by product. While content policies vary widely across companies, several common violation categories, such as child safety and hate speech, are generally shared across companies and reported on in transparency reports.
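Several of the questions above reduce to simple ratios over enforcement records. The sketch below computes a proactive rate, a median time-to-removal, and an appeal overturn rate for one violation type. The `Enforcement` record, its field names, and `enforcement_metrics` are hypothetical. The sketch also treats content flagged by automation as "removed before a user reported it", which is a simplification.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Enforcement:
    """One enforcement action on a piece of content (hypothetical format)."""
    violation: str     # e.g. "spam", "violence"
    detected_by: str   # "automation" or "user_report"
    hours_live: float  # hours the content was available before removal
    appealed: bool     # the user asked for the decision to be reconsidered
    overturned: bool   # the decision was reversed on second review


def enforcement_metrics(actions, violation):
    """Compute a few common policy enforcement metrics for one violation type."""
    subset = [a for a in actions if a.violation == violation]
    if not subset:
        return None
    # Simplification: treat automated detection as "found before any user report".
    proactive = sum(a.detected_by == "automation" for a in subset)
    appeals = [a for a in subset if a.appealed]
    overturned = sum(a.overturned for a in appeals)
    return {
        "actioned": len(subset),
        "proactive_rate": round(100 * proactive / len(subset), 1),
        "median_hours_live": median([a.hours_live for a in subset]),
        "appeals": len(appeals),
        # No appeals means there is no meaningful overturn rate to report.
        "overturn_rate": round(100 * overturned / len(appeals), 1) if appeals else None,
    }


# Hypothetical sample data, for illustration only
actions = [
    Enforcement("spam", "automation", 0.5, False, False),
    Enforcement("spam", "automation", 2.0, True, False),
    Enforcement("spam", "user_report", 10.0, True, True),
    Enforcement("violence", "user_report", 4.0, False, False),
]

print(enforcement_metrics(actions, "spam"))
```

Using the median rather than the mean for time-to-removal keeps a handful of long-lived items from dominating the figure, though companies may report either.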

Example of a Transparency Report that Shows Content which was Proactively Removed

Policy enforcement is a relatively new and emerging category of transparency reporting, and companies continue to innovate and expand upon the types of data and metrics they release. While metrics on the volume and outcomes of enforcement actions set an important foundation for this reporting category, there is a growing push for companies to publish metrics that measure how effective those enforcement actions truly are at preventing harm or bad experiences. Presenting contextual metrics and information alongside removal numbers helps readers understand and evaluate the effectiveness of a company’s efforts to protect its users. Examples of contextual metrics include the “prevalence” metric released by Facebook, the “violating view rate” released by YouTube, and the “reach of policy-violating Pins” metric released by Pinterest, all of which use different methods to measure user exposure to violating content. Metrics like these can be challenging to generate, but act as an important accountability mechanism that incentivizes companies to continuously improve the true effectiveness of their policies and enforcement systems.
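As a simplified illustration of how a views-based exposure metric can be estimated, the sketch below assumes reviewers have labeled a simple random sample of content views as violating or not. It then computes the share of violating views, together with a Wilson score confidence interval. This is a generic sampling approach and not the published methodology of Facebook, YouTube, or Pinterest. The function name, the sample, and the choice of interval are assumptions for illustration.

```python
import math


def estimate_prevalence(sampled_view_labels, z=1.96):
    """Estimate the share of views that showed violating content.

    sampled_view_labels: one boolean per randomly sampled view, True if a
    reviewer judged the content seen in that view to be violating.
    Returns (estimate, lower, upper) as percentages. The bounds are a Wilson
    score interval; z=1.96 corresponds to roughly 95% confidence.
    """
    n = len(sampled_view_labels)
    if n == 0:
        raise ValueError("need at least one sampled view")
    p = sum(sampled_view_labels) / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    # Clamp to [0, 1] to guard against tiny floating-point overshoots.
    lower = max(0.0, center - half_width)
    upper = min(1.0, center + half_width)
    return 100 * p, 100 * lower, 100 * upper


# Hypothetical sample: 10,000 labeled views, 8 of which showed violating content.
labels = [True] * 8 + [False] * 9_992
estimate, low, high = estimate_prevalence(labels)
print(f"prevalence ~ {estimate:.2f}% (95% CI {low:.3f}% to {high:.3f}%)")
```

Reporting an interval alongside the point estimate matters because violating views are rare. In this hypothetical sample the point estimate is 0.08%, but a sample of this size leaves meaningful uncertainty around that figure.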

The online enforcement decisions a company makes often have offline impact. As a result, transparency reporting on policy enforcement provides critical insight into how companies approach moderation decisions that go on to influence real-world events, such as Twitter’s decision to suspend the account of former U.S. president Donald Trump for violating its “Glorification of Violence” policy. Because these actions can have a profound impact on free speech and expression on the internet, companies should provide transparency into these policies and their enforcement. With the increasing significance of, and public attention on, platform policy enforcement, transparency reporting on policy enforcement will almost certainly become mandatory in the European Union and may become so in the United States as well.

Examples of Policy Enforcement Transparency Reports

- Facebook, Community Standards Enforcement Report

- Twitter, Rules Enforcement Transparency Report

- Reddit, Annual Transparency Reports

- Twitch, Transparency Report

More Information

- Santa Clara Principles on Transparency and Accountability in Content Moderation

- The Transparency Reporting Toolkit, Content Takedown Reporting, Open Technology Institute

Intellectual Property Enforcement

Transparency reports on intellectual property include data and metrics on takedown notices for alleged infringement of intellectual property law. Copyright, trademark, and other intellectual property claims receive particular attention, in large part because of the special way they are treated under U.S. law and the comparatively high volume of takedown notices they can generate. The most common type of intellectual property transparency reporting covers copyright takedown notices and counter-notices submitted under the process established by the Digital Millennium Copyright Act (“DMCA”). There is little standardization in the data these reports contain, but common metrics include the number of takedown requests received, the number of pieces of content removed, and the removal rate. One recent innovation in this category is Facebook’s proactive enforcement metric for intellectual property violations, which captures how much violating content was detected and removed before it was ever reported by the rights holder. These reports might also identify which organizations filed the most takedown notices, or present examples of how the company responded to specific notable claims.

Example of a Transparency Report that Shows Intellectual Property Takedown Notices

Intellectual property transparency reports represent an important tool for internet companies to demonstrate how they handle intellectual property laws, combat intellectual property infringement, and push back against false claims that threaten free and lawful speech.

More Information